In Week 1 we looked at ingesting S3 data; now it’s time to take that a step further. This week we’ve got a short list of tasks for you all to do.

The basics aren’t earth-shattering but might cause you to scratch your head a bit once you start building the solution.

Frosty Friday Inc., your benevolent employer, has an S3 bucket that was filled with .csv data dumps. These dumps aren’t very complicated and all have the same style and contents. All of these files should be placed into a single table.

However, some important data may be uploaded as well. These files follow a different naming scheme and need to be tracked: we need their metadata stored for reference in a separate table. You can recognize them with the help of a file inside the S3 bucket. This file, keywords.csv, contains all of the keywords that mark a file as important.

Objective:

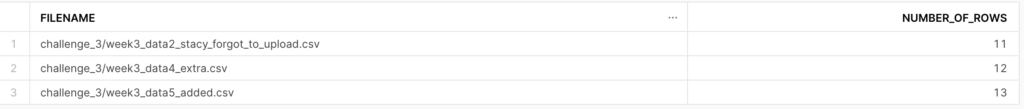

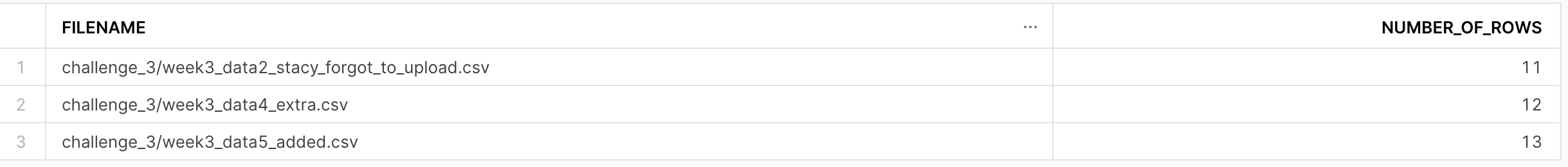

Create a table that lists all the files in our stage that contain any of the keywords in the keywords.csv file.

The S3 bucket’s URI is: s3://frostyfridaychallenges/challenge_3/
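The matching rule in the objective can be sketched outside Snowflake in a few lines of Python: a file counts as important when its name contains at least one keyword. This is only an illustration of the logic, not a solution to the challenge. The keywords and file names below are hypothetical placeholders rather than the real contents of keywords.csv or the bucket, and two points are assumptions: that the keyword sits in the first column under a header row, and that "contain" means a case-insensitive substring match.

```python
import csv
import io


def load_keywords(csv_text: str) -> list[str]:
    """Read keywords from CSV text.

    Assumes a header row and the keyword in the first column.
    """
    reader = csv.reader(io.StringIO(csv_text))
    next(reader, None)  # skip the (assumed) header row
    return [row[0].strip().lower() for row in reader if row and row[0].strip()]


def important_files(filenames: list[str], keywords: list[str]) -> list[str]:
    """Return the file names that contain at least one keyword (case-insensitive)."""
    return [
        name for name in filenames
        if any(keyword in name.lower() for keyword in keywords)
    ]


# Hypothetical sample data, for illustration only.
sample_keywords_csv = "keyword\nalpha\nbeta\n"
sample_files = [
    "challenge_3/dump_1.csv",
    "challenge_3/dump_2_alpha.csv",
    "challenge_3/dump_3_BETA.csv",
]

keywords = load_keywords(sample_keywords_csv)
flagged = important_files(sample_files, keywords)
print(flagged)
```

In Snowflake, the same filter would be applied to the staged file name, which is exposed as the `METADATA$FILENAME` pseudo-column when querying a stage.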

Result:

Your result should look like:

Remember, if you want to participate:

- Sign up as a member of Frosty Friday. You can do this by clicking on the sidebar and then going to ‘REGISTER’ (note that joining our mailing list does not give you a Frosty Friday account).

- Post your code to GitHub and make it publicly available (check out our guide if you don’t know how).

- Post the URL in the comments of the challenge.

If you have any technical questions you’d like to pose to the community, you can ask them on our dedicated thread.

30 responses to “Week 3 – Basic”

-

Nice challenge. Took the chance to create the tables using templates based on the CSV files:

https://github.com/marioveld/frosty_friday/tree/main/ffw3

- Solution URL – https://github.com/marioveld/frosty_friday/tree/main/ffw3

-

🙂

- Solution URL – https://github.com/darylkit/Frosty_Friday/blob/main/Week%203%20-%20Metadata%20Queries/metadata_Queries.sql

-

‘LIKE ANY’ is Basic, but Useful.

My code is in Japanese.

- Solution URL – https://app.snowflake.com/oghptkp/et79103/w2bUPQqmFerC/query

-

‘LIKE ANY’ is Basic, but Useful.

My code is in Japanese. (One previous comment had the wrong Git URL.)

- Solution URL – https://github.com/tomoWakamatsu/FrostyFriday/blob/main/FrostyFriday-Week3.sql

-

Not sure it is the most straightforward approach, but using scripting you can nicely filter the staged files out! I learned a lot 🙂

- Solution URL – https://github.com/marco-scatassi-nimbus/Frosty-Friday/blob/main/week3/load_filtering_using_metadata.sql

-

Really quick solution, what do you think about it?

- Solution URL – https://github.com/marco-pastore-HH/Frosty-Friday-Challenges/blob/main/ff_challenge_3.sql

-

This is my version of the solution for this task. I hope you find it helpful! ^^

- Solution URL – https://github.com/GerganaAK/FrostyFridays/blob/main/Week%203%20%E2%80%93%20Basic
