A stakeholder in the HR department wants to do some change tracking, but is concerned that the stream created for them surfaces too much information they don’t care about.

Load in the parquet data and transform it into a table, then create a stream that will only show us changes to the DEPT and JOB_TITLE columns.

You can find the parquet data here.
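One possible approach is sketched below. It assumes the Parquet file has already been uploaded to an internal stage, and every object name in it (`ff_parquet`, `ff_week2_stage`, `employees`, `employees_dept_job`, `employees_dept_job_stream`) is hypothetical. The idea is to build the table from the file's inferred schema, then place the stream on a view that exposes only the columns of interest, so that changes to other columns should not surface in the stream.

```sql
-- Hypothetical names throughout; adjust to your own database and schema.

-- File format and internal stage for the Parquet file.
CREATE OR REPLACE FILE FORMAT ff_parquet TYPE = PARQUET;
CREATE OR REPLACE STAGE ff_week2_stage FILE_FORMAT = ff_parquet;

-- Assumption: the file is uploaded to the stage at this point,
-- for example through the Snowsight UI or a PUT command from a client.

-- Create the table from the schema detected in the staged file.
-- IGNORE_CASE => TRUE yields uppercase column names, so unquoted
-- identifiers such as DEPT and JOB_TITLE resolve as expected.
CREATE OR REPLACE TABLE employees USING TEMPLATE (
    SELECT ARRAY_AGG(OBJECT_CONSTRUCT(*))
    FROM TABLE(
        INFER_SCHEMA(
            LOCATION    => '@ff_week2_stage',
            FILE_FORMAT => 'ff_parquet',
            IGNORE_CASE => TRUE
        )
    )
);

-- Load the rows, matching Parquet columns to table columns by name.
COPY INTO employees
FROM @ff_week2_stage
FILE_FORMAT = (FORMAT_NAME = 'ff_parquet')
MATCH_BY_COLUMN_NAME = CASE_INSENSITIVE;

-- The view exposes only the key plus the two columns HR cares about.
CREATE OR REPLACE VIEW employees_dept_job AS
SELECT EMPLOYEE_ID, DEPT, JOB_TITLE
FROM employees;

-- The stream tracks changes as seen through the view.
CREATE OR REPLACE STREAM employees_dept_job_stream ON VIEW employees_dept_job;

-- Run this after the UPDATE statements to inspect the captured changes.
SELECT * FROM employees_dept_job_stream;
```

With this sketch, `<table_name>` in the commands below would be `employees`. Creating a stream on a view requires change tracking on the underlying table, which Snowflake enables automatically when the role creating the stream owns that table.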

Execute the following commands:

UPDATE <table_name> SET COUNTRY = 'Japan' WHERE EMPLOYEE_ID = 8;

UPDATE <table_name> SET LAST_NAME = 'Forester' WHERE EMPLOYEE_ID = 22;

UPDATE <table_name> SET DEPT = 'Marketing' WHERE EMPLOYEE_ID = 25;

UPDATE <table_name> SET TITLE = 'Ms' WHERE EMPLOYEE_ID = 32;

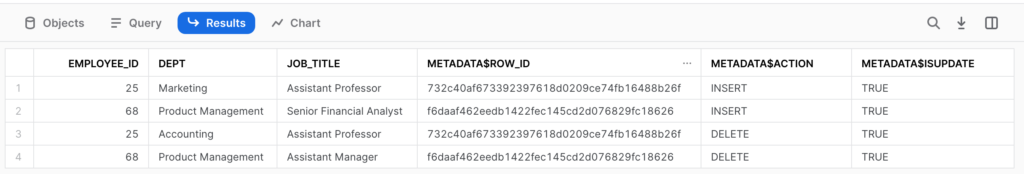

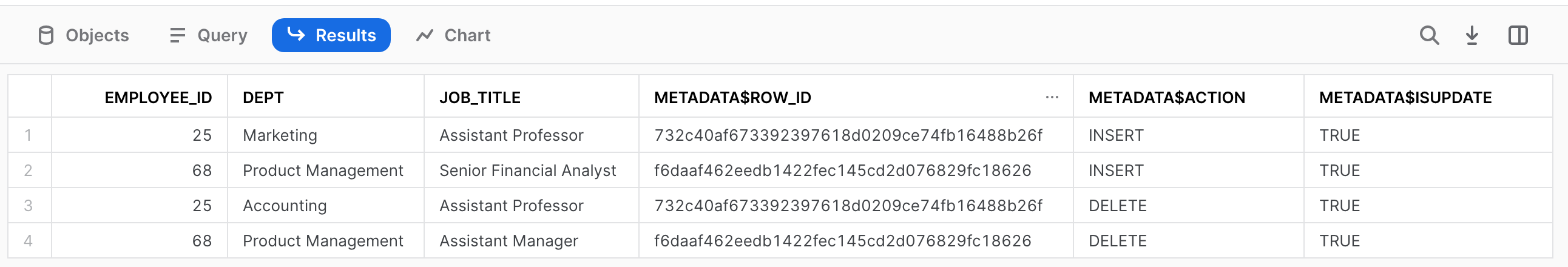

UPDATE <table_name> SET JOB_TITLE = 'Senior Financial Analyst' WHERE EMPLOYEE_ID = 68;The result should look like this:

- Sign up as a member of Frosty Friday. You can do this by clicking on the sidebar and then going to ‘REGISTER’ (note that joining our mailing list does not give you a Frosty Friday account).

- Post your code to GitHub and make it publicly available (check out our guide here if you don’t know how).

- Post the URL in the comments of the challenge.

If you have any technical questions you’d like to pose to the community, you can ask here on our dedicated thread.

30 responses to “Week 2 – Intermediate”

-

I used an external stage and INFER_SCHEMA() for the Parquet file:

https://github.com/marioveld/frosty_friday/tree/main/ffw2

Fun challenge!

- Solution URL – https://github.com/marioveld/frosty_friday/tree/main/ffw2

-

curve ball with the column selection

- Solution URL – https://github.com/NMangera/frosty_friday/blob/main/wk%202%20-%20intermediate%20/streams

-

I used an internal stage, uploaded the file from the UI, and used infer_schema().

- Solution URL – https://github.com/taksho/Frosty_Friday/blob/main/Week2/stream.sql

-

🙂

- Solution URL – https://github.com/darylkit/Frosty_Friday/blob/main/Week%202%20-%20Streams/streams.sql

-

Used internal stage and infer_schema() (infer_schema was new to me)

- Solution URL – https://github.com/Nardeepm/Frosty_Friday/blob/main/Week%202%20Frosty%20Friday.sql

-

I used infer_schema (schema detection). It’s very useful!

My code comments are in Japanese.

- Solution URL – https://github.com/tomoWakamatsu/FrostyFriday/blob/main/FrostyFriday-Week2.sql

-

I’m translating the Japanese text of the Challenge.

The first solution involves using INFER_SCHEMA.

The second solution doesn’t utilize INFER_SCHEMA; instead, it relies on an older method, which proved to be quite challenging.

- Solution URL – https://github.com/gakut12/Frosty-Friday/blob/main/week2_intermediate_streams/week2.sql
