A stakeholder in the HR department wants to do some change-tracking but is concerned that the stream which was created for them gives them too much info they don’t care about.

Load in the parquet data and transform it into a table, then create a stream that will only show us changes to the DEPT and JOB_TITLE columns.

You can find the parquet data here.

Execute the following commands:

UPDATE <table_name> SET COUNTRY = 'Japan' WHERE EMPLOYEE_ID = 8;

UPDATE <table_name> SET LAST_NAME = 'Forester' WHERE EMPLOYEE_ID = 22;

UPDATE <table_name> SET DEPT = 'Marketing' WHERE EMPLOYEE_ID = 25;

UPDATE <table_name> SET TITLE = 'Ms' WHERE EMPLOYEE_ID = 32;

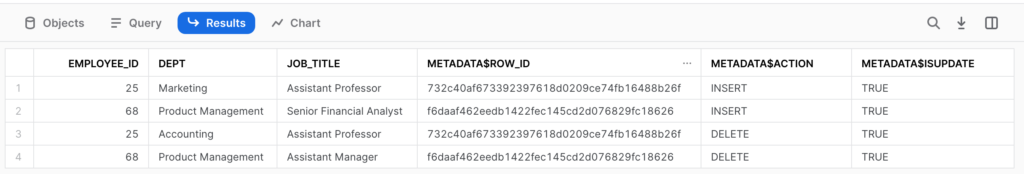

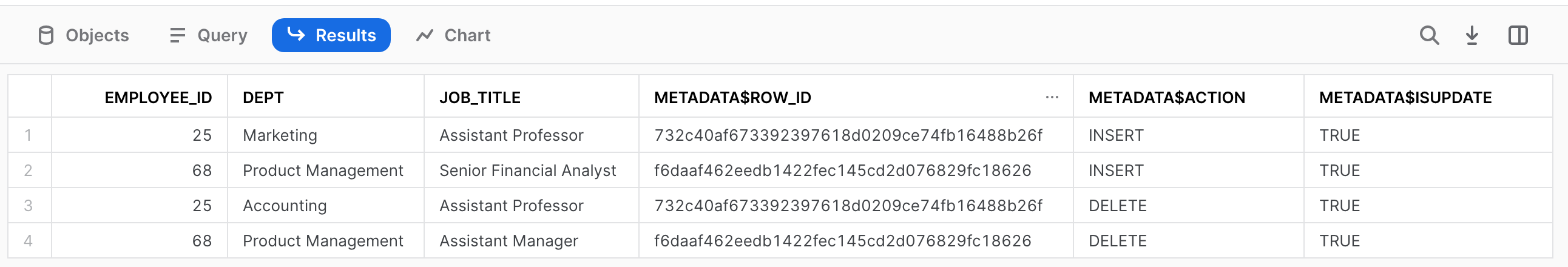

UPDATE <table_name> SET JOB_TITLE = 'Senior Financial Analyst' WHERE EMPLOYEE_ID = 68;The result should look like this:

- Sign up as a member of Frosty Friday. You can do this by clicking on the sidebar, and then going to ‘REGISTER‘ (note joining our mailing list does not give you a Frosty Friday account)

- Post your code to GitHub and make it publicly available (Check out our guide if you don’t know how to here)

- Post the URL in the comments of the challenge.

If you have any technical questions you’d like to pose to the community, you can ask here on our dedicated thread.

33 responses to “Week 2 – Intermediate”

-

Targeting specific fields was a nice touch.

-

Reorganised my submission repo, new master solution URL here.

- Solution URL – https://github.com/ChrisHastieIW/Frosty-Friday

-

-

Refreshed the Concept of

1. INFER_SCHEMA retrieves and returns the schema from a set of staged files.

2. GENERATE_COLUMN_DESCRIPTION returns the list of columns necessary to create a table, external table, or view.

3. CREATE TABLE … USING TEMPLATE expands upon Snowflake’s CREATE TABLE functionality to automatically create the structured table using the detected schema from the staged files with no additional input.- Solution URL – Solution URL: https://github.com/sachingithubaccnt/Frosty_Friday/blob/main/Week2_Intermediate.sql

-

A two-step solution this time using dbt

Use the macro from week 1 to create a new stage with parquet filetype.

Create a [table](https://github.com/dsmdavid/frostyfridays-sf/blob/main/models/challenge_02.sql) from the file.

Create a view with the desired columns to track.

Use a post-hook in the view to…

1. create the stream.

2. update the underlying table.And the view & stream:

https://github.com/dsmdavid/frostyfridays-sf/blob/main/models/challenge_02_v.sql -

great material to learn about stream

- Solution URL – https://github.com/yohei1126/frosty-friday/blob/main/WEEK2.sql

-

Loading the local file is a bit tricky, interesting to see different ways of doing it. Also learnt about using a view to track limited column, that’s pretty neat.

- Solution URL – https://github.com/zlzlzl2-data/FrostyFriday/blob/main/FF2_LZ.sql

-

Late to the party. First time I used stream had to read up on several resources. I liked the View use from several users here so updated my final submission to use that. Thanks for the learning opportunity.

- Solution URL – https://github.com/ArnoJissink/FrostyFriday/blob/main/Week2_Intermediate_Stream_parquet

-

Fun!

- Solution URL – https://github.com/scallybrian/bs-frosty-sf/tree/main/frostyfridays/models/challenge_002

-

Few issues with trying to use a table stage, so reverted back to just using a named stage to load the file into.

- Solution URL – https://github.com/ChrisBBiztory/FrostyFriday/blob/main/Week_2.sql

-

Please see also the attached solution as a blog: https://theinformationlab.nl/en/2022/08/19/developing-snowflake-skills-2/

- Solution URL – https://github.com/Atzmonky/snowflake/blob/main/ch2_streams.sql

-

Nice challenge!

- Solution URL – https://github.com/pekka-kanerva/frosty-friday/blob/main/week2/ff-week2-solution.sql

-

Streams on selective first led me create a new table but then i thought view would be best

- Solution URL – https://github.com/ankitsr92/Ankit_Work/blob/main/FrostyFriday/Week1/Week2.sql

-

Thanks Atzmon, I had a look at your code. Had one error though, which I solved by specifying the file upon copy into.

- Solution URL – https://github.com/vdharst/Frosty_Fridays/blob/main/week_2.sql

-

Great way to start to conceptualise streams and how they work.

-

Solution URL

-

Solution URL updated

- Solution URL – https://github.com/jameskalfox/frosty-friday-snowflake-challenges/blob/main/Week_02.sql

-

-

Enjoyed this one!

- Solution URL – https://github.com/apd-jlaird/frosty-friday/blob/main/week_2/ff_week_2.sql

-

Good question. Limiting the columns to be tracked is a bit tricky.

- Solution URL – https://github.com/indigo13love/FrostyFriday/blob/main/week2.sql

-

Tricky challenge as I hadn’t used streams before but got there in the end

- Solution URL – https://github.com/BenAConnor/Frosty_Friday/blob/main/Week%202%20Solution.sql

-

Nice intro to streams 🙂

- Solution URL – https://github.com/alaishaa/frostyfridays/blob/35c1d68348ff04e210e99aad6aae7baafbf37416/Week2/Week2.sql

-

Love the challenges so far!

- Solution URL – https://github.com/canonicalized/FrostyFriday/blob/main/WEEK2.sql

-

Here’s my solution

https://github.com/CSimonini/Frosty_Friday/blob/main/W02_Int_Streams.sql

Leave a Reply

You must be logged in to post a comment.